I am a third-year Ph.D. student in the Data Science and Analytics (DSA) Thrust at HKUST, where I am very fortunate to be supervised by Prof. Sunghun KIM and Prof. Raymond Chi Wing WONG.

I received my master’s degree from the School of Electronic and Computer Engineering at Peking University (北京大学信息工程学院), where I was advised by Prof. Yuexian Zou. I earned my bachelor’s degree from the College of Internet of Things Engineering at Hohai University (河海大学物联网工程学院). I also work closely with Prof. Shoujin Wang from the University of Technology Sydney.

My research interests include sequential recommendation, medical NLP, and large language models. I have published 20+ papers.

I am currently working on large language models (LLMs) for recommender systems and healthcare. I am actively seeking job opportunities in both academia (faculty or postdoctoral positions) and industry. If you are interested in academic collaboration or would like to discuss potential opportunities, please feel free to email me at zhoupalin@gmail.com.

🔥 News

- 2025.05: 🚀 Our new benchmark paper “BrowseComp-ZH: Benchmarking Web Browsing Ability of Large Language Models in Chinese” is now available on ArXiv!

This is the first high-difficulty benchmark specifically built to evaluate LLMs’ real-world web browsing and reasoning abilities on the Chinese internet, spanning 289 multi-hop, verifiable questions across 11 domains.

📄 Read the paper

📂 Get the dataset on GitHub

📢 Chinese introduction: shares and support are welcome!

- 2025.05: 🎉 1 paper accepted by KDD 2025. Congrats to Jian!

- 2025.02: 🧑‍🔧 Serving as a reviewer for CVPR 2025, ICCV 2025, and TOIS.

- 2025.01: 🎉 Milestone: Google Scholar citations exceed 1000!

- 2025.01: 🎉 1 paper accepted by WWW 2025.

- 2025.01: 🎉 1 paper accepted by JMIR. Congrats to Wanxin!

- 2025.01: 🎉 Our paper “Application of Large Language Models in Medicine” accepted by Nature Reviews Bioengineering. Congrats to Fenglin!

- 2025.01: 🎉 Our survey paper “Cold-Start Recommendation Towards the Era of Large Language Models (LLMs): A Comprehensive Survey and Roadmap” is now available on ArXiv. Read it here

- 2024.10: 🧑‍🔧 Serving as a reviewer for ICLR 2025, WWW 2025, and ICASSP 2025.

- 2024.06: 🧑‍🔧 Serving as a reviewer for AAAI 2025, NeurIPS 2024, and COLING 2025.

- 2024.05: 🎉 1 paper is accepted by ACL 2024. Congrats to Jian!

- 2024.01: 🎉 1 paper is accepted by WWW 2024.

- 2023.09: 🎉 Our paper “Is ChatGPT a Good Recommender? A Preliminary Study” is accepted by The 1st Workshop on Recommendation with Generative Models (organized by CIKM 2023).

- 2023.09: 🎉 1 paper is accepted by NeurIPS 2023.

📝 Publications

📊 Recommender System

🧠 LLM/LVLM for RecSys

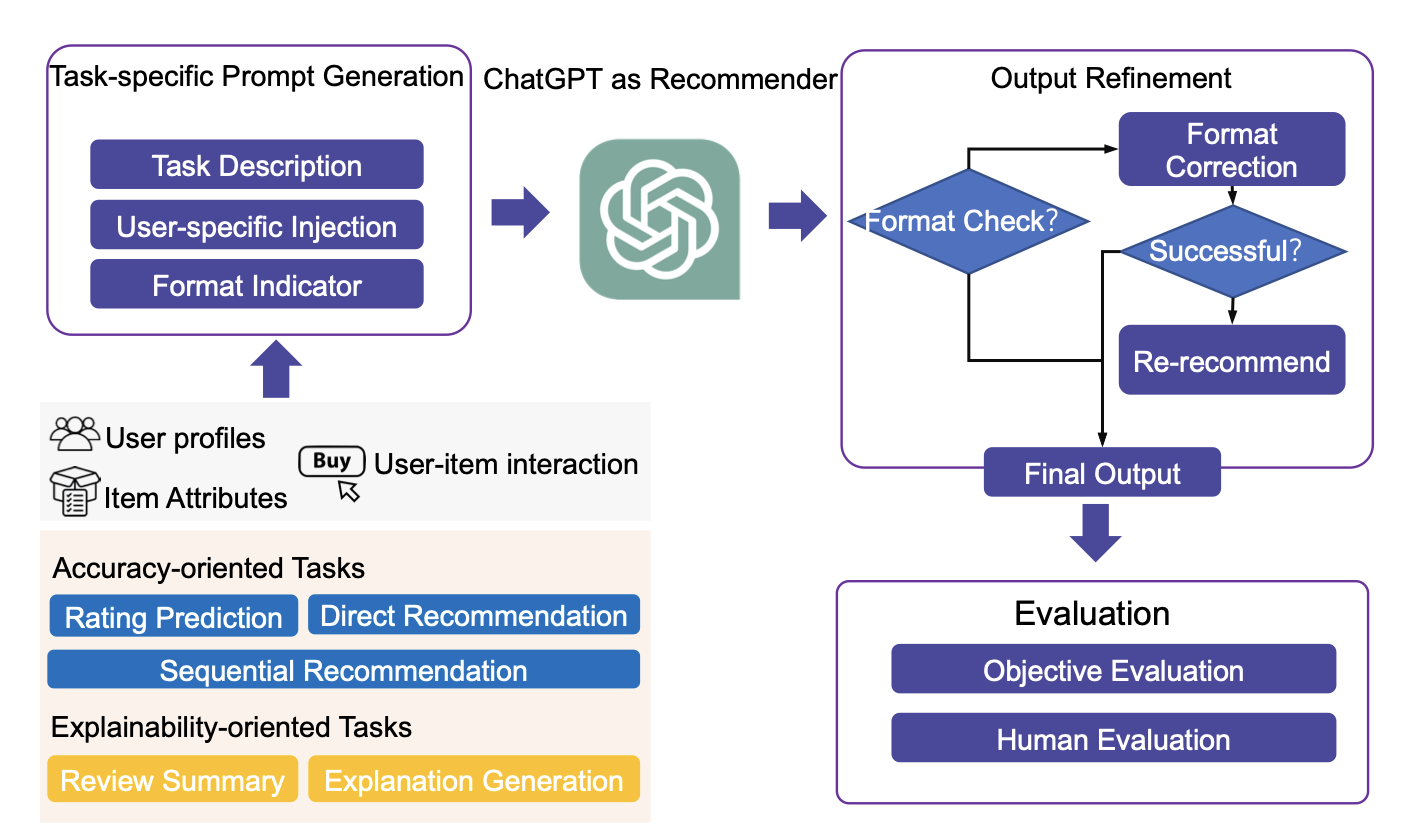

Is ChatGPT a Good Recommender? A Preliminary Study

Junling Liu, Chao Liu, Peilin Zhou, Renjie Lv, Kang Zhou, Yan Zhang.

- First work to utilize ChatGPT as a universal recommender, assessing its capabilities across five tasks.

- Provides valuable insights into the strengths and limitations of ChatGPT in recommendation systems.

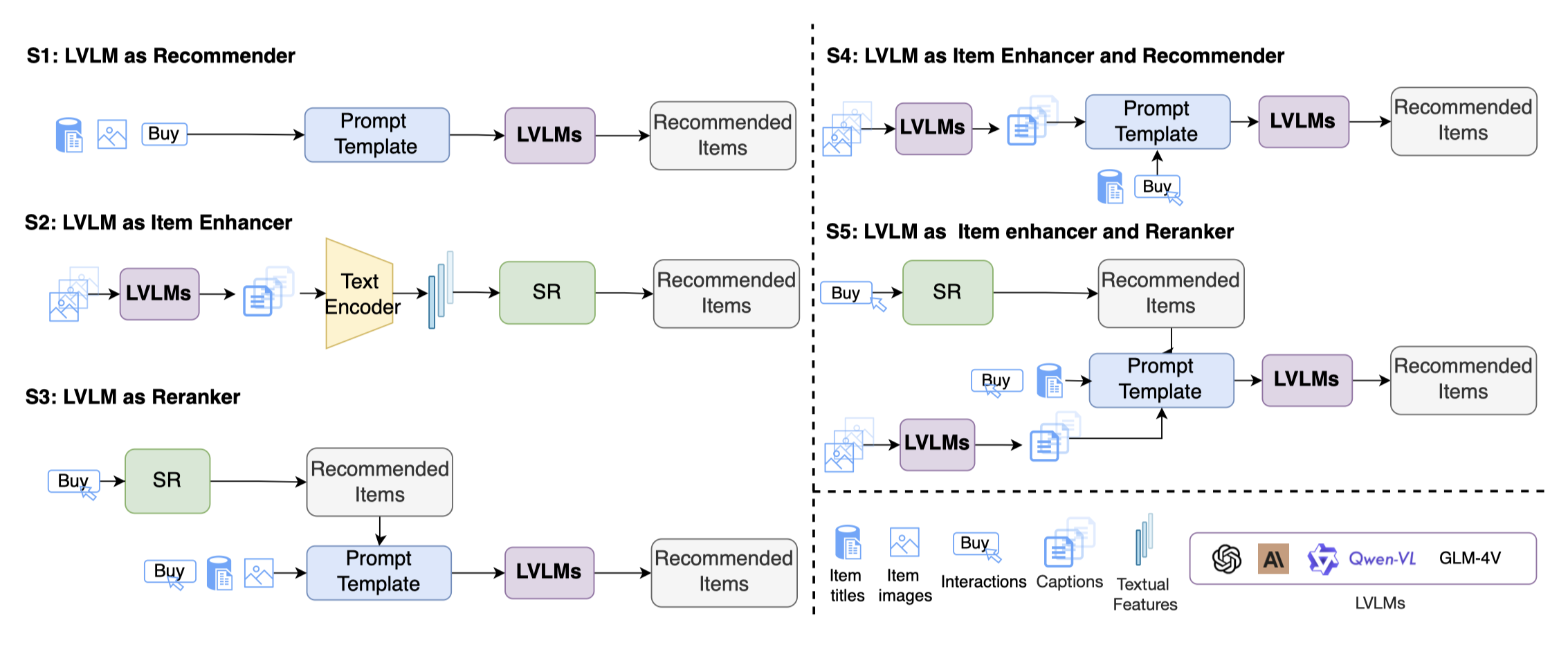

When Large Vision Language Models Meet Multimodal Sequential Recommendation: An Empirical Study

Peilin Zhou, Chao Liu, Jing Ren, Xinfeng Zhou, Yueqi Xie, Meng Cao, Zhongtao Rao, You-Liang Huang, Dading Chong, Junling Liu, Jae Boum Kim, Shoujin Wang, Raymond Chi-Wing Wong, Sunghun Kim.

- Introduces MSRBench, the first benchmark to systematically evaluate the integration of Large Vision Language Models (LVLMs) in multimodal sequential recommendation.

- Compares five integration strategies (recommender, item enhancer, reranker, and two combinations), identifying the reranker strategy as the most effective.

- Constructs the enhanced dataset Amazon Review Plus, with LVLM-generated image descriptions to support more flexible item modeling.

- [ArXiv] LLMRec: Benchmarking Large Language Models on Recommendation Task, Junling Liu, Chao Liu, Peilin Zhou, et al.

- [ArXiv] Exploring Recommendation Capabilities of GPT-4V(ision): A Preliminary Case Study, Peilin Zhou, Meng Cao, Youliang Huang, Qichen Ye, et al.

🔁 Sequential Recommendation

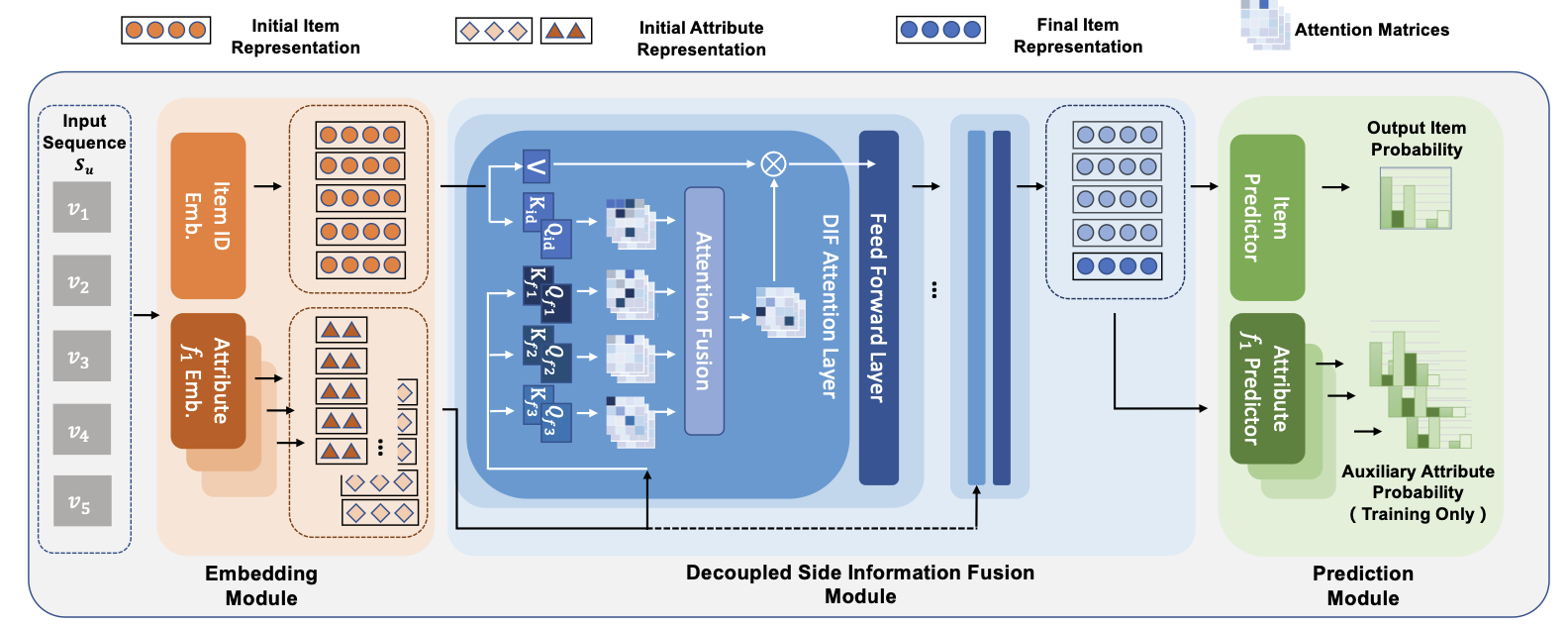

Decoupled Side Information Fusion for Sequential Recommendation

Yueqi Xie, Peilin Zhou, Sunghun Kim

- Motivation: The early integration of various types of embeddings limits the expressiveness of attention matrices due to a rank bottleneck and constrains the flexibility of gradients.

- Solution: Moves the side information from the input layer to the attention layer and decouples the attention calculation of the item representation from that of each type of side information.

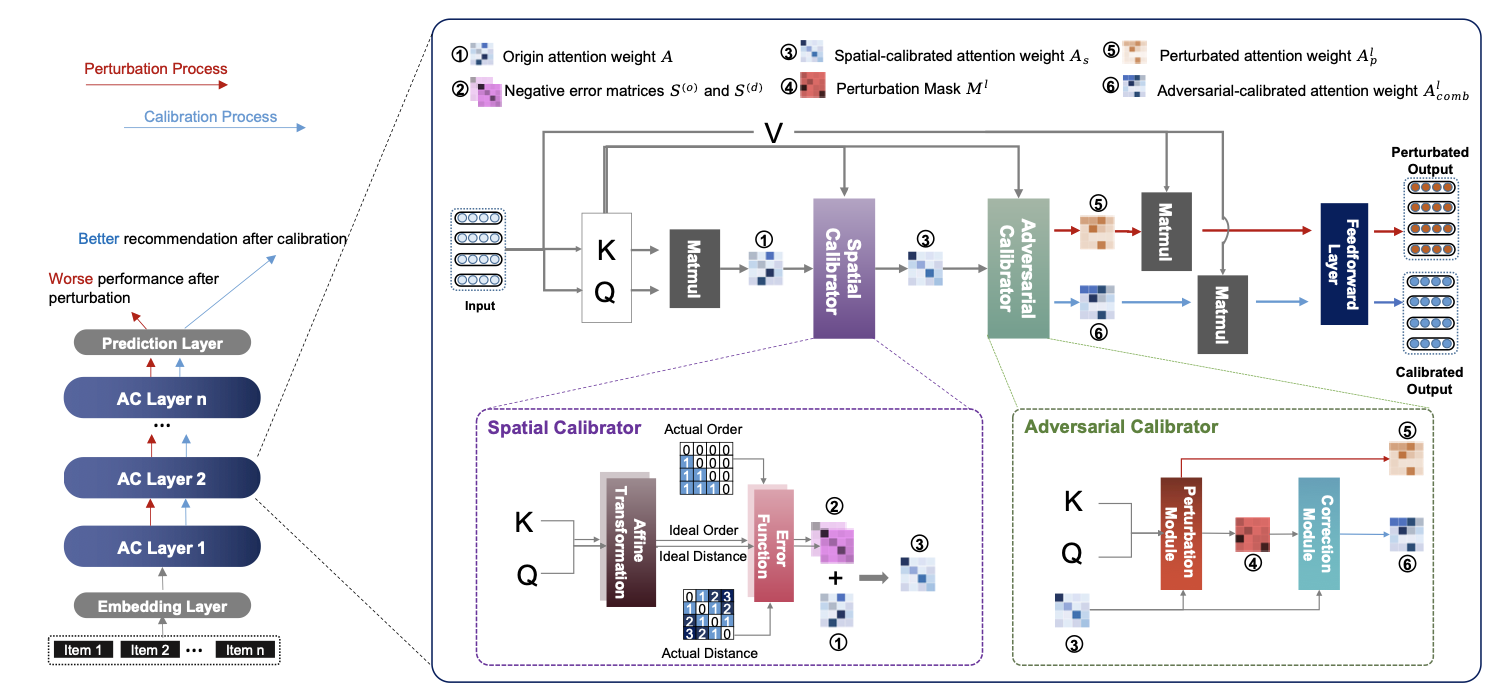

Attention Calibration for Transformer-based Sequential Recommendation

Peilin Zhou, Qichen Ye, Yueqi Xie, Jingqi Gao, and Sunghun Kim.

- The AC-TSR framework is designed to reduce the impact of sub-optimal position encoding and noisy input on existing transformer-based SRS models, with limited overhead.

- Two plug-and-play calibrators, a spatial calibrator and an adversarial calibrator, are designed to rectify unreliable attention.

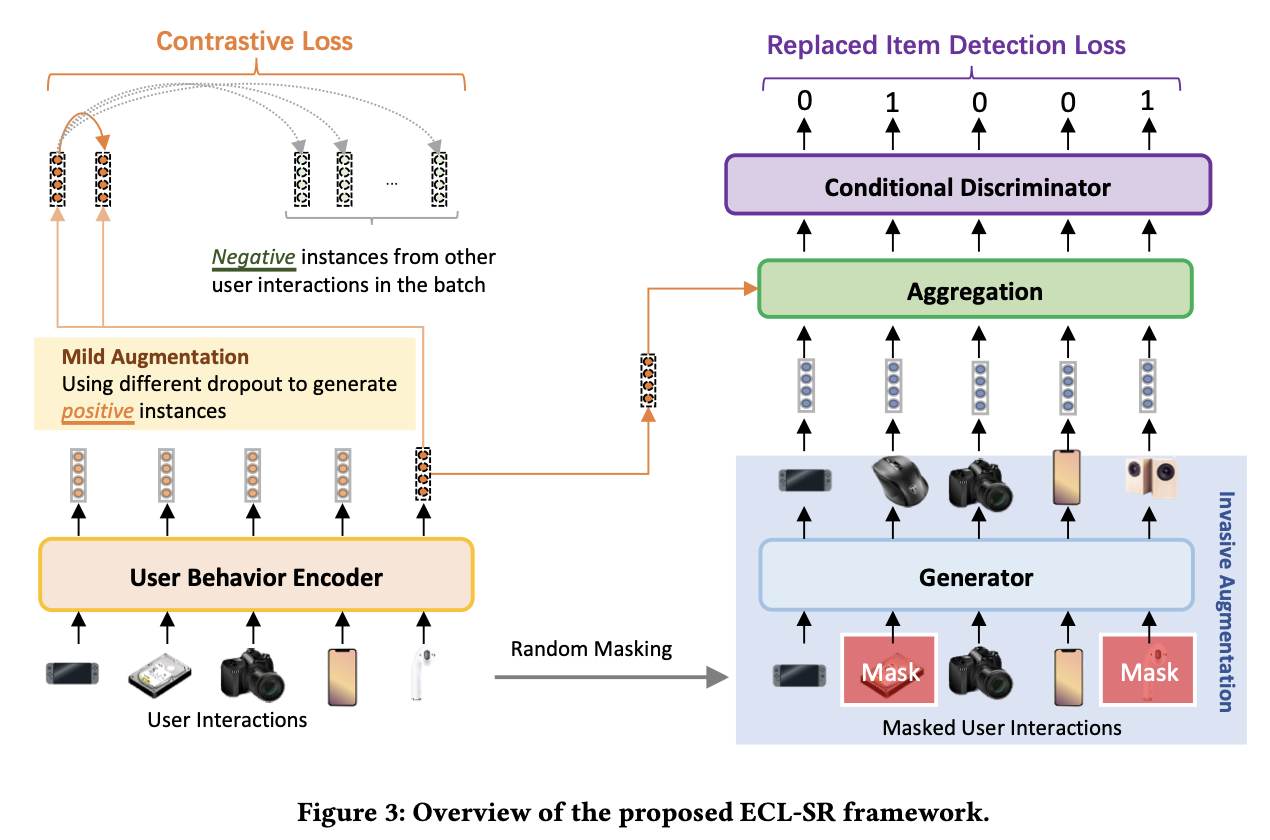

Equivariant Contrastive Learning for Sequential Recommendation

Peilin Zhou, Jingqi Gao, Yueqi Xie, Qichen Ye, et al.

- Motivation: Some augmentation strategies, such as item substitution, can change the user's intent. Indiscriminately learning invariant representations for all augmentation strategies may therefore be sub-optimal.

- Solution: We propose Equivariant Contrastive Learning for Sequential Recommendation (ECL-SR), which gives SR models stronger discriminative power: the learned user behavior representations are sensitive to invasive augmentations and insensitive to mild ones.

- [WWW 2024] Is Contrastive Learning Necessary? A Study of Data Augmentation vs Contrastive Learning in Sequential Recommendation, Peilin Zhou, Youliang Huang, Yueqi Xie, et al.

- [RecSys 2023] Rethinking Multi-Interest Learning for Candidate Matching in Recommender Systems, Yueqi Xie, Jingqi Gao, Peilin Zhou, Qichen Ye, et al.

🏥 AI for Healthcare

📱 Medical Social Media Dataset

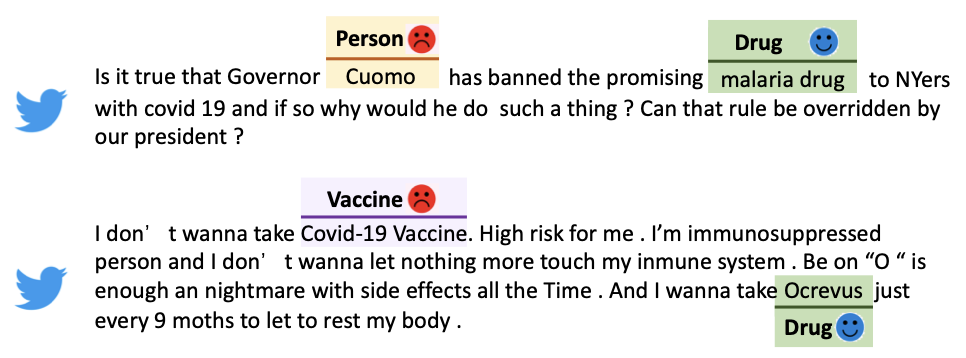

METS-CoV: A Dataset of Medical Entity and Targeted Sentiment on COVID-19 Related Tweets

Peilin Zhou, Zeqiang Wang, Dading Chong, Zhijiang Guo, et al.

- Introduces METS-CoV, the first dataset to include medical entities and targeted sentiments on COVID-19-related tweets.

- Designed around the characteristics of the medical domain, METS-CoV can help researchers use natural language processing models to mine valuable medical information from tweets.

- Annotation guidelines, benchmark models, and source code are released for medical social media research.

- [Under Review] Streamlining Social Media Information Retrieval for Public Health Research with Deep Learning, Yining Hua, Shixu Lin, Minghui Li, Yujie Zhang, Peilin Zhou, Ying-Chih Lo, Li Zhou, Jie Yang

🧬 Large Language Models for Healthcare

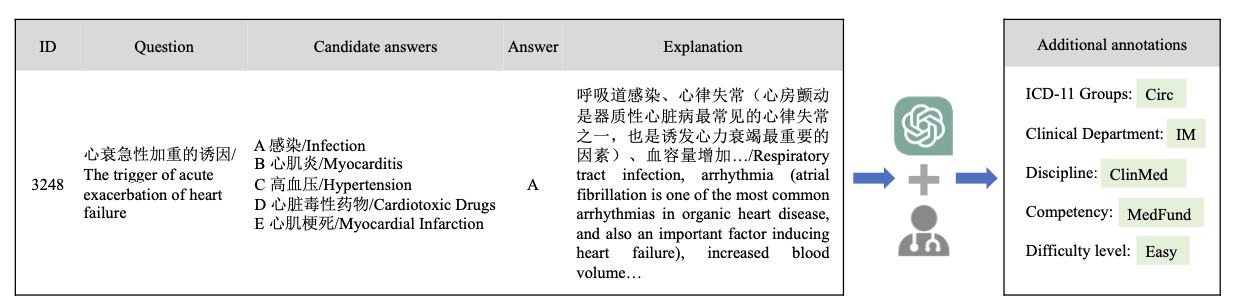

Benchmarking Large Language Models on CMExam–A Comprehensive Chinese Medical Exam Dataset

Junling Liu, Peilin Zhou, Yining Hua, Dading Chong, et al.

- We construct CMExam, a dataset sourced from the stringent Chinese National Medical Licensing Examination, featuring 60,000+ multiple-choice questions with detailed explanations.

- This study aims to spur further exploration of LLMs in medicine by providing a comprehensive benchmark for their evaluation.

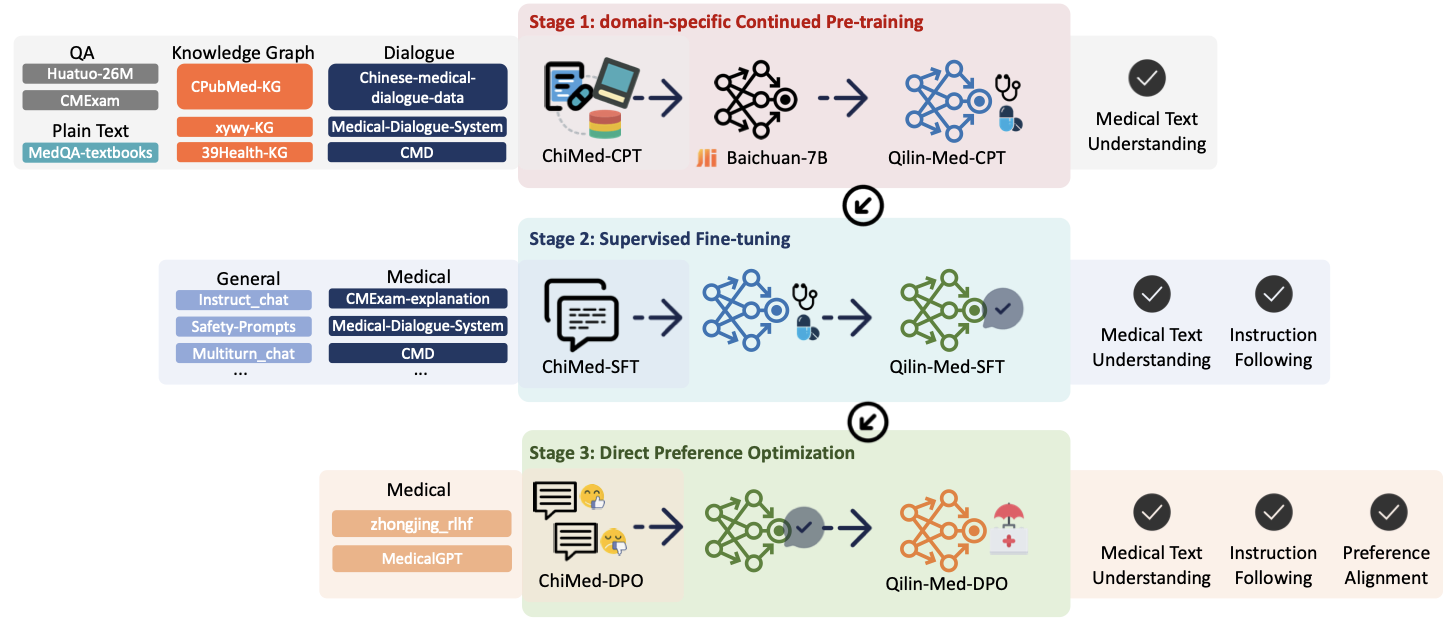

Qilin-Med: Multi-stage Knowledge Injection Advanced Medical Large Language Model

Qichen Ye, Junling Liu, Dading Chong, Peilin Zhou, et al.

- Constructs the ChiMed dataset, which contains diverse data types (QA, plain texts, knowledge graphs, and dialogues).

- Develops a Chinese medical LLM, Qilin-Med, via a multi-stage knowledge injection pipeline.

- [Under Review] Qilin-Med-VL: Towards Chinese Large Vision-language Model for General Healthcare, Junling Liu, Ziming Wang, Qichen Ye, Dading Chong, Peilin Zhou, Yining Hua

- [Under Review] A Survey of Large Language Models in Medicine: Progress, Application, and Challenge, Hongjian Zhou, Boyang Gu, Xinyu Zou, Yiru Li, Sam S Chen, Peilin Zhou, Junling Liu, Yining Hua, Chengfeng Mao, Xian Wu, Zheng Li, Fenglin Liu

- [Under Review] Large Language Models in Mental Health Care: a Scoping Review, Yining Hua, Fenglin Liu, Kailai Yang, Zehan Li, Yi-han Sheu, Peilin Zhou, et al.

🌱 AI for Good

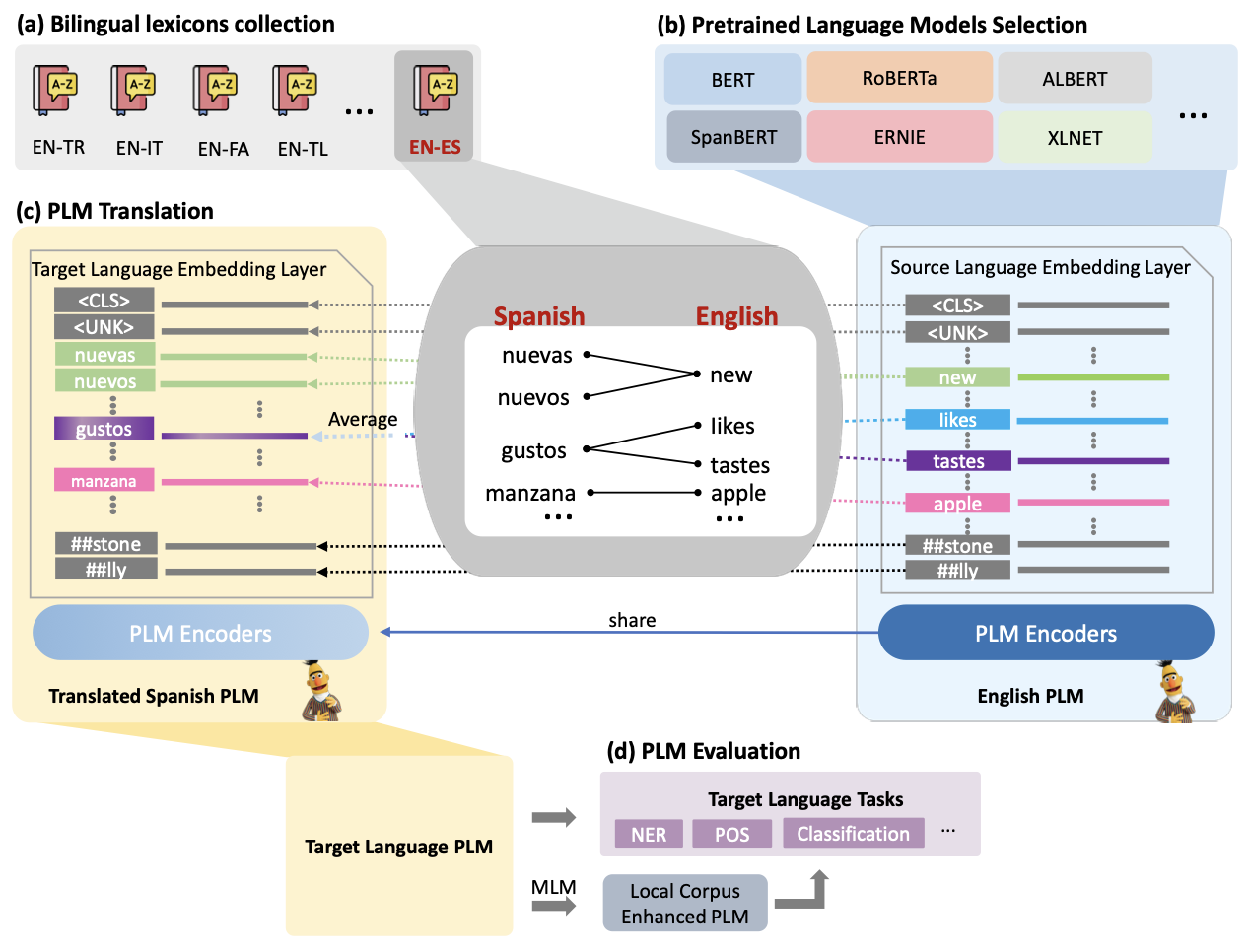

GreenPLM: Cross-lingual pre-trained language models conversion with (almost) no cost

Qingcheng Zeng, Lucas Garay, Peilin Zhou, Dading Chong, et al.

- Proposes a simple heuristic pipeline that uses bilingual lexicons to translate a source-language PLM into a target-language PLM at almost no computational cost, significantly reducing the carbon emissions of building foundation PLMs in various languages.

🤖 NLP Tasks and Datasets

📂 Dataset

- [ACL 2024] FinTextQA: A Dataset for Long-form Financial Question Answering, Jian Chen, Peilin Zhou, Yining Hua, et al.

🗣️ Spoken Language Understanding

- [ICASSP 2022] Joint Multiple Intent Detection and Slot Filling via Self-distillation, Lisong Chen, Peilin Zhou, Yuexian Zou.

- [INTERSPEECH 2022] Calibrate and Refine! A Novel and Agile Framework for ASR-error Robust Intent Detection, Peilin Zhou, Dading Chong, Helin Wang, and Qingcheng Zeng.

- [INTERSPEECH 2022] Low-resource Accent Classification in Geographically-proximate Settings: A Forensic and Sociophonetics Perspective, Qingcheng Zeng, Dading Chong, Peilin Zhou, Jie Yang.

- [ICASSP 2021] Sentiment Injected Iteratively Co-Interactive Network for Spoken Language Understanding, Zhiqi Huang, Fenglin Liu, Peilin Zhou, Yuexian Zou.

- [INTERSPEECH 2021] Semantic Transportation Prototypical Network for Few-shot Intent Detection, Weiyuan Xu, Peilin Zhou, Chenyu You, Yuexian Zou.

- [ICPR 2020] PIN: A novel parallel interactive network for spoken language understanding, Peilin Zhou, Zhiqi Huang, Fenglin Liu, Yuexian Zou.

📚 Machine Reading Comprehension

- [ICASSP 2021] Adaptive bi-directional attention: Exploring multi-granularity representations for machine reading comprehension, Nuo Chen, Fenglin Liu, Chenyu You, Peilin Zhou, Yuexian Zou

🎙️ Audio Pretraining

- [ICASSP 2023] Masked Spectrogram Prediction for Self-supervised Audio Pre-training, Dading Chong, Helin Wang, Peilin Zhou, Qingcheng Zeng

🎖 Honors and Awards

- 2023.10 Third Prize in the AI Hackathon (Healthcare Track) by Baichuan AI & Amazon Cloud.

- 2020.10 Second Prize in the Few-shot Spoken Language Understanding Challenge by SMP.

- 2020.10 The Second Prize Scholarship by Peking University.

- 2016.09 Academic Excellence Scholarship by Hohai University.

- 2015.05 National Scholarship by Ministry of Education of the People’s Republic of China.

📖 Education

- 2022.09 - 2025.07 (Expected), Ph.D. in Data Science and Analytics, HKUST.

- 2018.09 - 2021.07, M.Sc. in Computer Applied Technology, Peking University.

- 2013.09 - 2017.07, B.Eng. in Telecommunication Engineering, Hohai University.

🧑‍🔧 Academic Services

- Reviewer (or PC Member): TKDE, TOIS, KDD 2025, ICLR 2025, ICCV 2025, WWW 2025/2024, CVPR 2025/2024, AAAI 2025/2024, NeurIPS 2025/2024/2023/2022, EMNLP 2023, ACL 2025/2024/2023, ICASSP 2024/2023/2021, COLING 2023/2022, Interspeech 2021, Neural Computing and Applications

💻 Internships

- 2022.07 - 2022.09, Research Assistant, supervised by Sunghun KIM, Hong Kong University of Science and Technology.

- 2021.07 - 2022.08, Research Assistant, supervised by Jie Yang, School of Medicine, Zhejiang University.

- 2021.05 - 2022.07, AI Researcher, Upstage.

- 2021.07 - 2022.08, Visiting Student, The Chinese University of Hong Kong (Shenzhen).